How to Stop Virtual Reality from Making You Want to Puke

I’m sitting in Gordon Wetzstein’s lab at Stanford University with a hacked-together prototype of a head-mounted display strapped to my face, using a wireless Xbox controller to manipulate a series of 3-D models: a lion, a chessboard filled with chess pieces, an espresso machine, and so on.

The images are fairly simple, run-of-the-mill models, the kind that anyone could download from the Internet. What is interesting, though, is what happens as I stare at the models and turn them with the controller to inspect them from different angles. I can focus on parts of the scene at different depths, as I would when gazing at something in real life: when I look at, say, the chess pieces up close, those in the background look fuzzy, and vice versa when I focus on the pieces in the distance. And I don’t feel nauseous or dizzy, as I sometimes do when I’m playing around with virtual reality, especially when looking at objects that are close to my face.

Virtual reality is on the verge of commercial availability, with consumer-geared headsets like the Oculus Rift poised for release next year (see “Oculus Shows Its First Consumer Headset, Circular Hand Controls”). Yet while the technology has improved immensely in the last couple of years, plenty of crucial issues remain to be sorted out. Among them is the motion sickness that some people, myself included, feel in virtual reality, which arises from what’s known as vergence-accommodation conflict.

This conflict is what Wetzstein, an assistant professor of electrical engineering, and other researchers at Stanford are trying to solve with the headset I tried on, which they call a light field stereoscope—essentially, a device that uses a stack of two LCDs to show each eye a “light field” that makes virtual images look more natural than they typically do.

In real life, when you look at something, such as a flower, your eyes rotate so that their lines of sight converge on it (vergence), and the lens in each eye changes shape to bring it into focus (accommodation). The two responses normally agree. With stereoscopic 3-D, a technology commonly used by companies making virtual reality headsets, things get trickier. Each eye is shown a slightly different image of the same scene, and your brain combines the two images to produce a sense of depth. Your eyes converge on a virtual object that appears to sit in front of you, but to keep it sharp they must stay focused on the flat, lit-up display, which is at a fixed distance. That mismatch can result in nausea and dizziness. Unless the technology improves, this could make it hard for some people to truly interact with virtual reality up close, whether they’re playing a first-person-shooter game or controlling a surgical robot.
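To put rough numbers on the conflict, here is a minimal sketch that compares where the eyes converge with where they must focus, with focus measured in diopters (1 divided by the distance in meters). The 63 mm interpupillary distance and the 2-meter focal distance are illustrative assumptions, not specifications of the Oculus Rift or of the Stanford prototype.

```python
import math

# Illustrative assumptions only; not taken from any specific headset.
IPD_M = 0.063           # assumed interpupillary distance, in meters
FOCAL_DISTANCE_M = 2.0  # assumed fixed distance at which the optics place the screen


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two eyes' lines of sight when fixating a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def conflict_diopters(virtual_distance_m: float,
                      focal_distance_m: float = FOCAL_DISTANCE_M) -> float:
    """Gap between the vergence distance and the focus distance, in diopters (1/m)."""
    return abs(1 / virtual_distance_m - 1 / focal_distance_m)


if __name__ == "__main__":
    for d in (0.3, 0.5, 1.0, 2.0, 10.0):
        print(f"object at {d:5.1f} m: vergence {vergence_angle_deg(d):5.2f} deg, "
              f"conflict {conflict_diopters(d):.2f} D")
```

Under these assumptions the mismatch vanishes only for objects at the screen’s focal distance and grows quickly as virtual objects approach the face, which matches the article’s point that close-up interaction is where the problem bites.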

“When you want to touch virtual objects, things that are close by, and you want to interact with them, it becomes very important,” Wetzstein says.

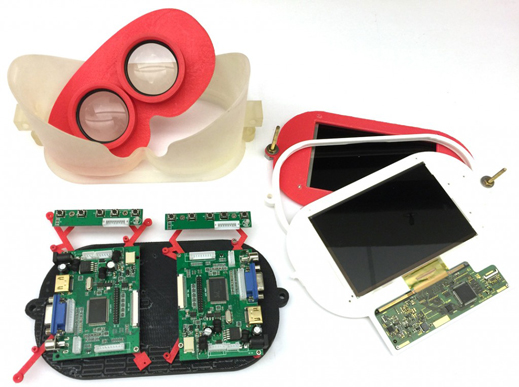

In hopes of making the stereoscopic virtual-reality experience more like what you see in real life, the Stanford researchers built a headset that contains two LCDs placed one in front of the other, with a backlight behind them, a spacer between them, and lenses in front of them. It’s connected to a computer that runs software necessary for the system to work.

The computer starts with a 3-D model, which the researchers’ software renders for each eye as a light field. In this case, Wetzstein says, it’s a five-by-five grid of slightly different 2-D images of the model, or 25 images per eye. An algorithm then condenses each eye’s light field into two images: one is shown on the rear LCD in the headset and the other on the front LCD. Light from the backlight passes through both displays, enters your pupils, and is projected onto your retinas.
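The article doesn’t spell out the algorithm, so the following is only a hedged sketch of one way two stacked LCDs can approximate a light field: a rank-1 nonnegative factorization, in which each ray’s brightness is modeled as the product of the front-pixel and rear-pixel transmittances it crosses. It is not the Stanford group’s code. It assumes a simplified 1-D (“flatland”) geometry with five views, standing in for one row of the five-by-five grid, and every name and size here (`render`, `factorize`, `N`, `PAD`) is hypothetical.

```python
import random

# Hypothetical flatland model: 5 views, like one row of the 5x5 grid.
VIEWS = range(-2, 3)  # view offsets u
N = 32                # pixels on the front layer (assumed)
PAD = 2               # extra rear pixels per side so every shifted ray hits the rear layer


def render(front, rear):
    """A ray in view u through front pixel x also crosses rear pixel x + u + PAD.
    Backlight passes through both LCDs, so the two transmittances multiply."""
    return [[front[x] * rear[x + u + PAD] for x in range(N)] for u in VIEWS]


def factorize(target, iters=200, eps=1e-9):
    """Rank-1 nonnegative factorization via multiplicative (Lee-Seung-style) updates.
    Each pixel enters the model linearly, so each update equals the exact least-squares
    value for that pixel with the other layer held fixed, clipped to [0, 1]."""
    rng = random.Random(0)
    front = [rng.uniform(0.5, 1.0) for _ in range(N)]
    rear = [rng.uniform(0.5, 1.0) for _ in range(N + 2 * PAD)]
    for _ in range(iters):
        # Update the front layer with the rear layer fixed.
        model = render(front, rear)
        for x in range(N):
            num = sum(target[i][x] * rear[x + u + PAD] for i, u in enumerate(VIEWS))
            den = sum(model[i][x] * rear[x + u + PAD] for i, u in enumerate(VIEWS))
            front[x] = min(1.0, front[x] * num / (den + eps))
        # Update the rear layer with the front layer fixed.
        model = render(front, rear)
        num = [0.0] * len(rear)
        den = [0.0] * len(rear)
        for i, u in enumerate(VIEWS):
            for x in range(N):
                j = x + u + PAD
                num[j] += target[i][x] * front[x]
                den[j] += model[i][x] * front[x]
        rear = [min(1.0, r * n / (d + eps)) for r, n, d in zip(rear, num, den)]
    return front, rear


def error(a, b):
    """Sum of squared differences between two light fields."""
    return sum((p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb))


if __name__ == "__main__":
    rng = random.Random(1)
    true_front = [rng.uniform(0.2, 1.0) for _ in range(N)]
    true_rear = [rng.uniform(0.2, 1.0) for _ in range(N + 2 * PAD)]
    target = render(true_front, true_rear)
    front, rear = factorize(target)
    print("squared error:", error(target, render(front, rear)))
```

Real scenes generally can’t be reproduced exactly by two multiplied layers, which is why the result is an approximation of the light field rather than a copy of it.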

What you see, Wetzstein says, is an approximation of the light field that’s being optically generated, which your eyes can freely move around and focus on where they want in virtual space.

“That’s normally not possible,” he says.

Wetzstein says the technology that goes into the headset is inexpensive and doesn’t require eye-tracking to figure out where the viewer is gazing, and he hopes it can be incorporated into headsets that come out several years from now. A paper about the work will be presented in August at the Siggraph computer graphics and interaction conference in Los Angeles.

Marty Banks, an optometry and vision science professor at the University of California, Berkeley, is also researching how to provide proper focus cues in virtual reality. He coauthored another paper to be presented at Siggraph describing an approach that, he says, shows higher-resolution images but requires eye tracking. Banks calls the display that Wetzstein and other researchers are working on cool and clever, but notes that its resolution is still fairly low (the Stanford group’s paper indicates scenes were displayed at a resolution of 640 by 800 pixels).

“That’s going to be a major thing to solve: how to get the resolution up. So that’s going to require more computation and more pixels and more expense,” he says. “There’s a ways to go there.”
