Facebook Will Try to Outsource a Fix for Its Fake-News Problem

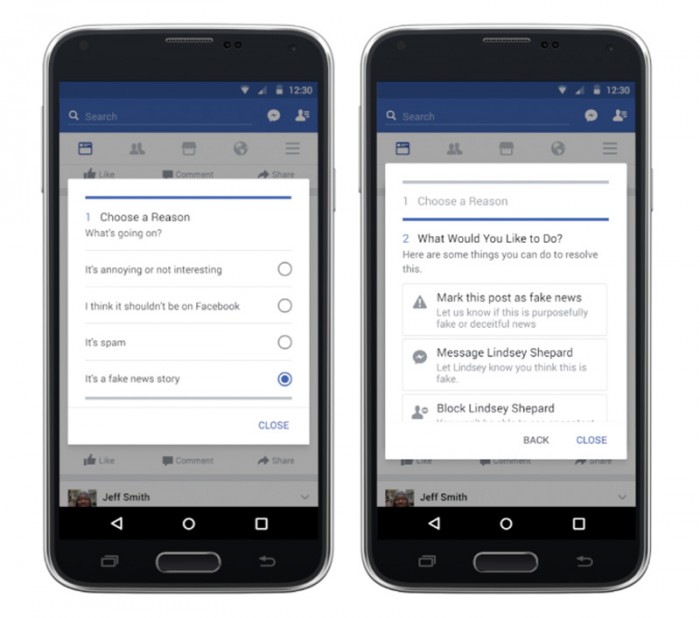

Facebook has announced a new initiative to help its users distinguish real news from fake, with a tool that neatly sidesteps any requirement for it to act as an arbiter of truth.

The social network will use a number of signals about content on its site, such as how often a post is deleted and whether comments on it contain arguments over its veracity, to identify potentially fake items. Suspect posts will be presented via a dashboard to fact-checking organizations Snopes, Politifact, ABC News, and FactCheck.org for analysis. If at least two of them contest facts within an article, users will see it tagged as “Disputed by 3rd Party Fact Checkers.”
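For readers who want the tagging rule spelled out, it can be sketched in a few lines of Python. This is only an illustration of the two-checker threshold described above, not Facebook's implementation: the `Post` class, the `label_for` function, and the constant names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Assumption: names and structure are invented for illustration.
# Only the threshold (two) and the label text come from the article.
DISPUTE_THRESHOLD = 2
DISPUTED_LABEL = "Disputed by 3rd Party Fact Checkers"


@dataclass
class Post:
    url: str
    # Names of the fact-checking organizations that contested the article.
    disputed_by: Set[str] = field(default_factory=set)


def label_for(post: Post) -> Optional[str]:
    """Return the warning label if enough fact-checkers dispute the post."""
    if len(post.disputed_by) >= DISPUTE_THRESHOLD:
        return DISPUTED_LABEL
    return None


if __name__ == "__main__":
    story = Post("https://example.com/story", {"Snopes", "Politifact"})
    print(label_for(story))
```

Storing the disputing organizations in a set means that a repeated report from the same checker counts only once toward the threshold.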

That absolves the social network of what is, admittedly, the incredibly tough call of whether a news item is genuine. Mark Zuckerberg has made no secret of the fact that he would rather not have Facebook making decisions about what is true and what is false. While he’s promised that more will be done, he’s also said that the issues are “complex, both technically and philosophically.”

Not that Facebook is entirely removed from the process. First, it will tweak its news feeds to suppress stories flagged as untrustworthy by the consortium of truth-seekers. And the Verge notes that employees will have to work out which posts are personal and which are purporting to be news. Facebook says that its employees won’t have to pass judgment on content, though it remains to be seen how easy it is to separate personal updates from news.

Regardless of how easy it is, it seems we need these kinds of tools. Following the furor surrounding fake news in the wake of the election, a new Pew Research Center study finds that 64 percent of U.S. adults say fabricated news stories cause confusion about current affairs. What’s more, 23 percent say that they have shared a made-up news story.

Maybe next time a similar survey is carried out, those numbers will be smaller.

(Read more: Facebook, Pew Research Center, “Regardless of Its Influence on the Election, Facebook Needs to Change,” “Facebook’s Fake-News Ad Ban Is Not Enough,” “Facebook’s Content Blocking Sends Some Very Mixed Messages”)
