AI could get 100 times more energy-efficient with IBM’s new artificial synapses

Neural networks are the crown jewel of the AI boom. They gorge on data and do things like transcribe speech or describe images with near-perfect accuracy (see “10 breakthrough technologies 2013: Deep learning”).

The catch is that neural nets, which are modeled loosely on the structure of the human brain, are typically constructed in software rather than hardware, and the software runs on conventional computer chips. That slows things down.

IBM has now shown that building key features of a neural net directly in silicon can make it 100 times more efficient. Chips built this way might turbocharge machine learning in coming years.

The IBM chip, like a neural net written in software, mimics the synapses that connect individual neurons in a brain. The strength of these synaptic connections needs to be tuned in order for the network to learn. In a living brain, this happens in the form of connections growing or withering over time. That is easy to reproduce in software but has proved infuriatingly difficult to achieve with hardware, until now.
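To see why tuning a synapse's strength is easy in software, consider a minimal sketch in which one connection is a single number nudged up or down after every example. This is an illustration only and is not IBM's method: the function name `train_synapse`, the learning rate, and the toy data are all assumptions made for the example.

```python
def train_synapse(inputs, targets, learning_rate=0.1, epochs=50):
    """Tune one synaptic weight so that weight * x approximates each target.

    Hypothetical illustration: a single connection trained with a simple
    error-correcting (delta) rule, not the scheme used in IBM's chip.
    """
    weight = 0.0  # the connection starts with no strength
    for _ in range(epochs):
        for x, target in zip(inputs, targets):
            output = weight * x          # signal passed through the synapse
            error = target - output      # how far off the output was
            # Strengthen or weaken the connection in proportion to the error.
            weight += learning_rate * error * x
    return weight


# Toy data (assumed): the target is always twice the input,
# so the weight should settle close to 2.
inputs = [1.0, 2.0, 3.0]
targets = [2.0, 4.0, 6.0]
learned_weight = train_synapse(inputs, targets)
print(f"learned weight: {learned_weight:.4f}")
```

In software the weight is just a variable that can be set to any value. The hardware challenge described here is getting a physical component to hold and adjust such a value reliably.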

The IBM researchers demonstrate the microelectronic synapses in a research paper published in the journal Nature. Their approach takes inspiration from neuroscience by using two types of synapses: short-term ones for computation and long-term ones for memory. This method “addresses a few key issues,” most notably low accuracy, that have bedeviled previous efforts to build artificial neural networks in silicon, says Michael Schneider, a researcher at the National Institute of Standards and Technology who is researching neurologically inspired computer hardware.

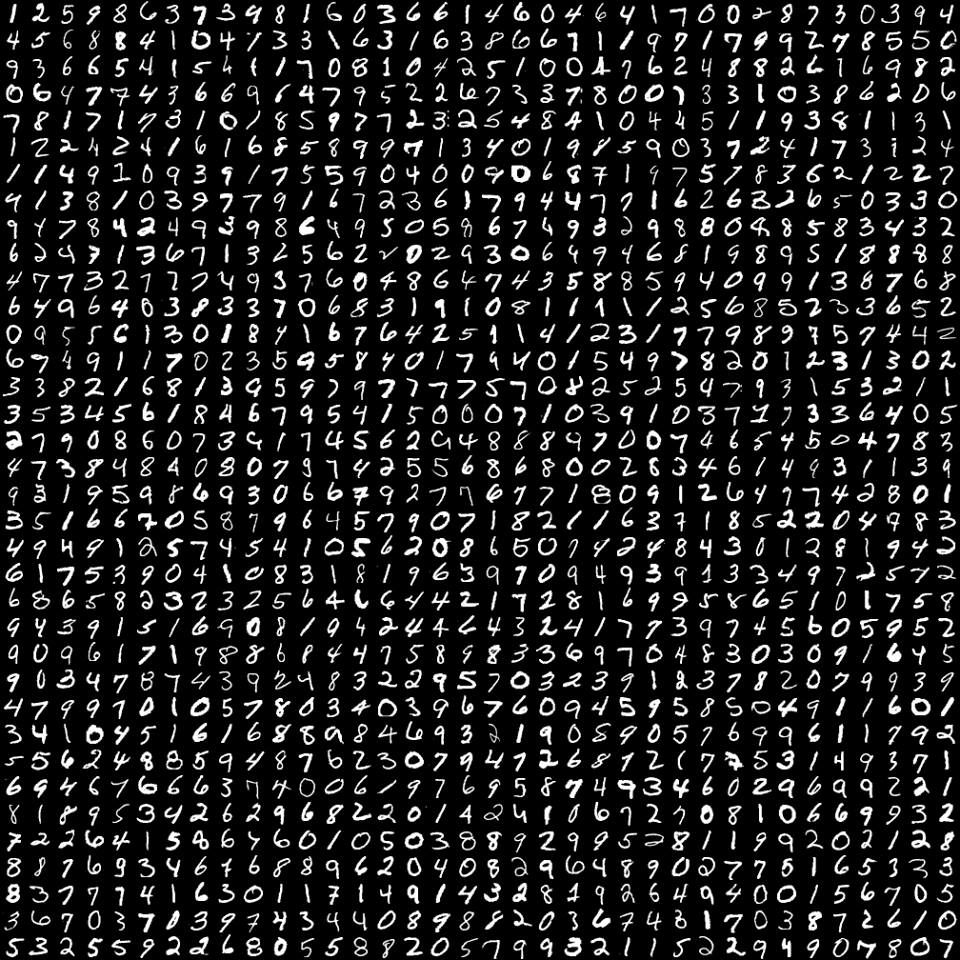

The researchers tested a neural network built from the components on two simple image-recognition tasks: handwriting recognition and color image classification. They found the system to be as accurate as a software-based deep neural network even though it consumed only 1 percent as much energy.

The discovery isn’t only important for AI. If it scales to commercial production, it could vindicate a big bet IBM has been making. Although the company doesn’t sell computer chips these days, it has been investing in efforts to reinvent computer hardware, hoping that fundamentally new types of microelectronic components might help provide impetus for the next big advances. This new technique could be a first step, making machine learning more efficient and easier to deploy on small devices like smartphones.

“A factor of 100 in energy efficiency and in training speed for fully connected layers certainly seems worth further effort,” says Schneider. Not everyone is convinced, however. Kwabena Boahen, who researches computer architectures at Stanford, says the work reminds him of the hype surrounding “memristors,” a tunable type of transistor somewhat analogous to a synapse, which has been under development for over a decade.

The design of IBM’s chips is also still relatively clunky, consisting of five transistors and three other components where there would be a single transistor on a normal chip. Some aspects of the system, moreover, have so far been tested only in simulation, a common technique for validating microchip designs. IBM will still need to build and test a complete chip. Nevertheless, the work may be a significant, biologically inspired step toward a computer with AI logic burned into its core.
