The US military wants to teach AI some basic common sense

Wherever artificial intelligence is deployed, you will find it has failed in some amusing way. Take the strange errors made by translation algorithms that confuse having someone for dinner with, well, having someone for dinner.

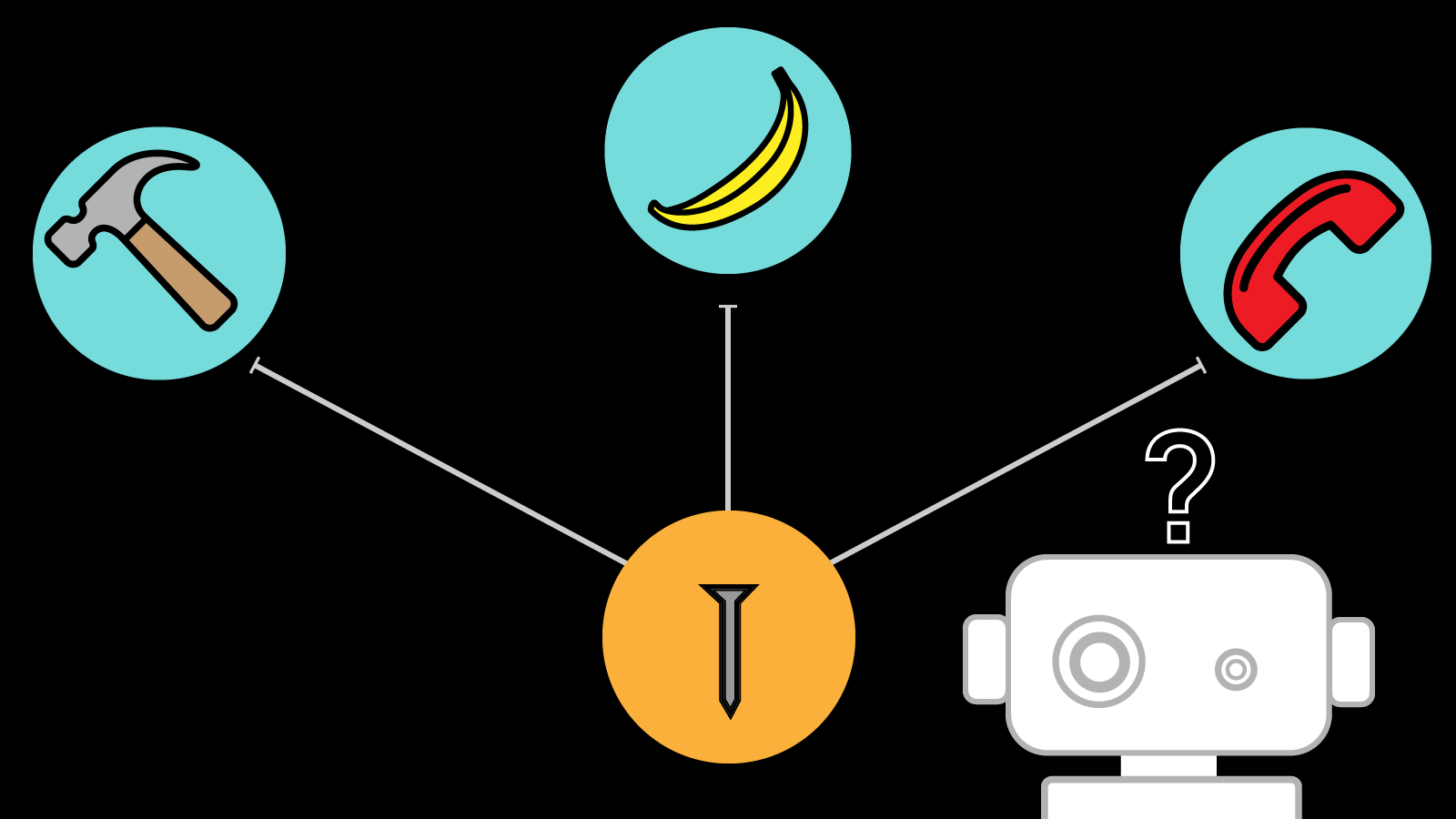

But as AI is used in ever more critical situations, such as driving autonomous cars, making medical diagnoses, or drawing life-or-death conclusions from intelligence information, these failures will no longer be a laughing matter. That’s why DARPA, the research arm of the US military, is addressing AI’s most basic flaw: it has zero common sense.

“Common sense is the dark matter of artificial intelligence,” says Oren Etzioni, CEO of the Allen Institute for AI, a research nonprofit based in Seattle that is exploring the limits of the technology. “It’s a little bit ineffable, but you see its effects on everything.”

DARPA’s new Machine Common Sense (MCS) program will run a competition that asks AI algorithms to make sense of questions like this one:

A student puts two identical plants in the same type and amount of soil. She gives them the same amount of water. She puts one of these plants near a window and the other in a dark room. The plant near the window will produce more (A) oxygen (B) carbon dioxide (C) water.

A computer program needs some understanding of the way photosynthesis works in order to tackle the question. Simply feeding a machine lots of previous questions won’t solve the problem reliably.

These benchmarks will focus on language because it can so easily trip machines up, and because it makes testing relatively straightforward. Etzioni says the questions offer a way to measure progress toward common-sense understanding, which will be crucial.
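To see why a multiple-choice format makes testing straightforward, consider a rough sketch of how such a benchmark could be scored. This is only an illustration, not DARPA's actual evaluation code: the `Question` class, the `accuracy` function, and the `always_first` baseline are hypothetical names invented here, and the only data is the plant question quoted above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Question:
    """One multiple-choice item (hypothetical structure, for illustration)."""
    prompt: str
    choices: Dict[str, str]  # choice label -> choice text
    answer: str              # label of the correct choice


def accuracy(questions: List[Question],
             choose: Callable[[Question], str]) -> float:
    """Fraction of questions for which `choose` returns the correct label."""
    if not questions:
        return 0.0
    correct = sum(1 for q in questions if choose(q) == q.answer)
    return correct / len(questions)


def always_first(question: Question) -> str:
    """Trivial baseline: pick the alphabetically first label, ignoring the text."""
    return sorted(question.choices)[0]


# The plant question from the article; photosynthesis makes (A) correct.
PLANT = Question(
    prompt=("A student puts two identical plants in the same type and amount "
            "of soil. She gives them the same amount of water. She puts one "
            "of these plants near a window and the other in a dark room. "
            "The plant near the window will produce more"),
    choices={"A": "oxygen", "B": "carbon dioxide", "C": "water"},
    answer="A",
)

if __name__ == "__main__":
    print(accuracy([PLANT], always_first))
```

Scoring reduces to comparing a chosen label with an answer key, so progress can be tracked as a single number. The baseline also shows the format's limit: it gets this one question right by luck, so a benchmark needs many varied questions before a high score means anything.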

Tech companies are busy commercializing machine-learning techniques that are powerful but fundamentally limited. Deep learning, for instance, makes it possible to recognize words in speech or objects in images, often with incredible accuracy. But the approach typically relies on feeding large quantities of labeled data (raw audio signals, say, or the pixels of images) into a big neural network. The system can learn to pick out important patterns, but it can easily make mistakes because it has no concept of the broader world.

In contrast, human babies quickly develop an intuitive understanding of the world that serves as a foundation for their intelligence.

It is far from obvious, however, how to solve the problem of common sense. Previous attempts to help machines understand the world have focused on building large knowledge databases by hand. This is an unwieldy and essentially never-ending task. The most famous such effort is Cyc, a project that has been in the works for decades.

The problem may prove hugely important. A lack of common sense, after all, is disastrous in certain critical situations, and it could ultimately hold artificial intelligence back. DARPA has a history of investing in fundamental AI research. Previous projects helped spawn today’s self-driving cars as well as the most famous voice-operated personal assistant, Siri.

“The absence of common sense prevents an intelligent system from understanding its world, communicating naturally with people, behaving reasonably in unforeseen situations, and learning from new experiences,” Dave Gunning, a program manager at DARPA, said in a statement issued this morning. “This absence is perhaps the most significant barrier between the narrowly focused AI applications we have today and the more general AI applications we would like to create in the future.”
