Should a self-driving car kill the baby or the grandma? Depends on where you’re from.

In 2014 researchers at the MIT Media Lab designed an experiment called Moral Machine. The idea was to create a game-like platform that would crowdsource people’s decisions on how self-driving cars should prioritize lives in different variations of the “trolley problem.” In the process, the data generated would provide insight into the collective ethical priorities of different cultures.

The researchers never predicted the experiment’s viral reception. Four years after the platform went live, millions of people in 233 countries and territories have logged 40 million decisions, making it one of the largest studies ever done on global moral preferences.

A new paper published in Nature presents the analysis of that data and reveals how much moral preferences diverge on the basis of culture, economics, and geographic location.

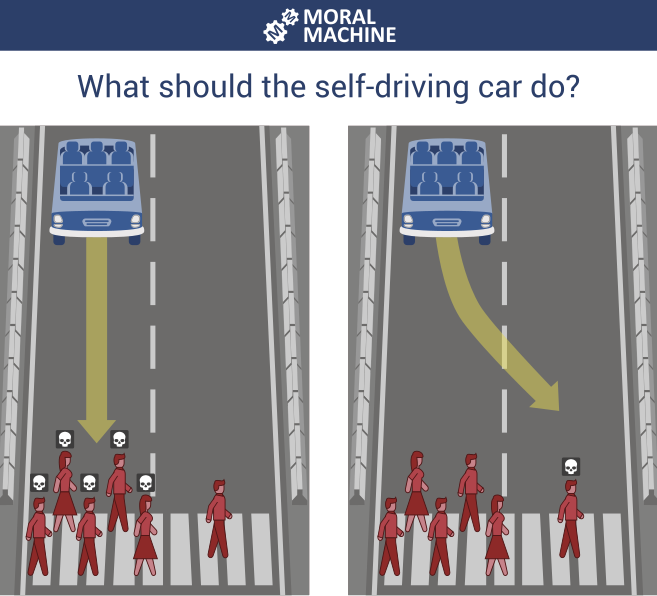

The classic trolley problem goes like this: You see a runaway trolley speeding down the tracks, about to hit and kill five people. You have access to a lever that could switch the trolley to a different track, where a different person would meet an untimely demise. Should you pull the lever and end one life to spare five?

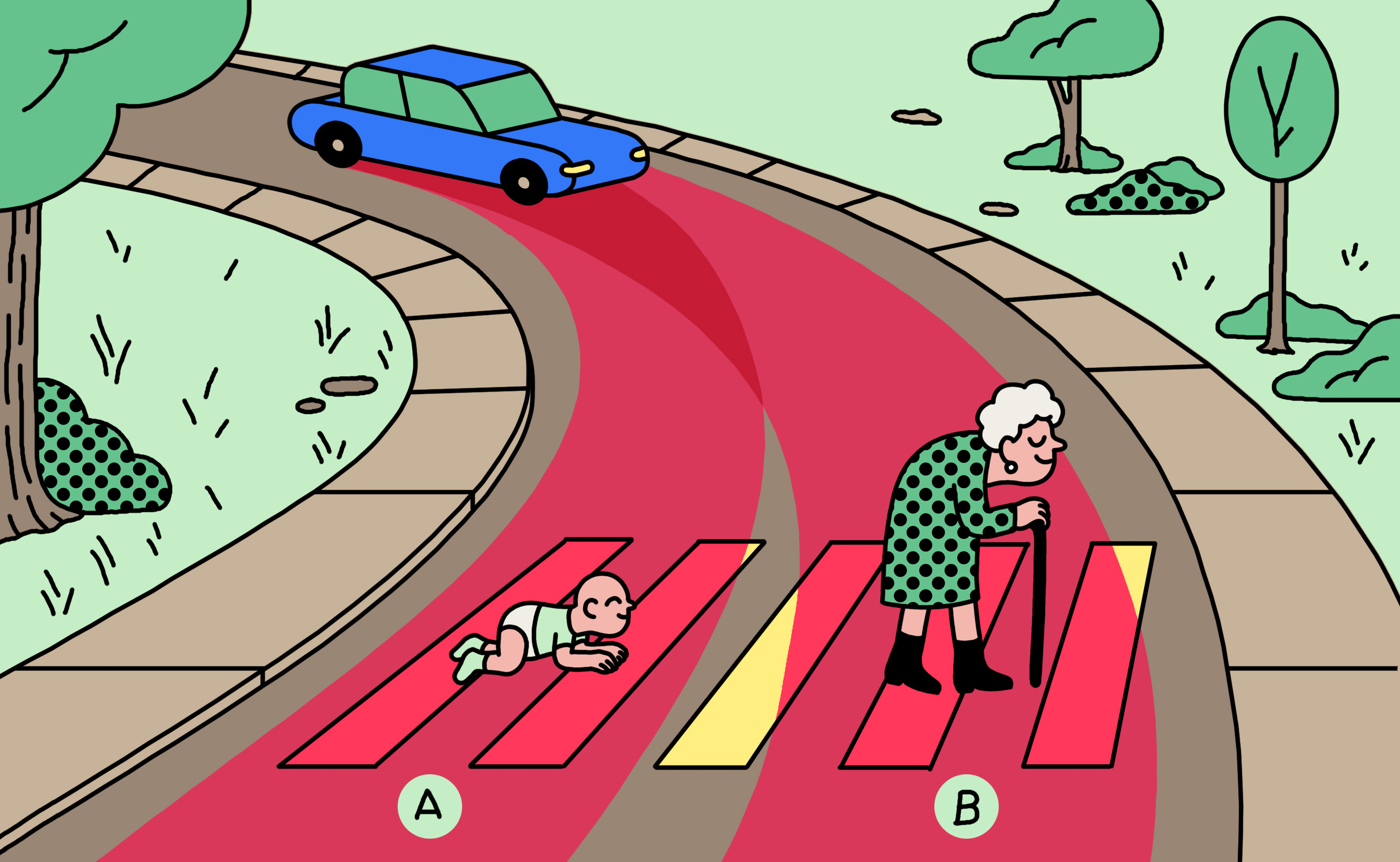

The Moral Machine adapted that idea to test nine different comparisons shown to polarize people: Should a self-driving car prioritize humans over pets, passengers over pedestrians, more lives over fewer, women over men, young over old, fit over sickly, higher social status over lower, law-abiders over law-benders? And finally, should the car swerve (take action) or stay on course (inaction)?

Rather than pose one-to-one comparisons, however, the experiment presented participants with various combinations, such as whether a self-driving car should continue straight ahead to kill three elderly pedestrians or swerve into a barricade to kill three youthful passengers.

The researchers found that countries’ preferences differ widely, but they also correlate highly with culture and economics. For example, participants from collectivist cultures like China and Japan are less likely to spare the young over the old—perhaps, the researchers hypothesized, because of a greater emphasis on respecting the elderly.

Similarly, participants from poorer countries with weaker institutions are more tolerant of jaywalkers relative to pedestrians who cross legally. And participants from countries with a high level of economic inequality show greater gaps between the treatment of individuals with high and low social status.

And, in what boils down to the essential question of the trolley problem, the researchers found that the sheer number of people in harm’s way wasn’t always the dominant factor in choosing which group should be spared. The results showed that participants from individualistic cultures, like the UK and US, placed a stronger emphasis on sparing more lives given all the other choices. The authors suggest this may be because of the greater emphasis on the value of each individual.

Countries within close proximity to one another also showed closer moral preferences, with three dominant clusters in the West, East, and South.

The researchers acknowledged that the results could be skewed, given that participants in the study were self-selected and therefore more likely to be internet-connected, of high social standing, and tech savvy. But those interested in riding self-driving cars would also be likely to have those characteristics.

The study has interesting implications for countries currently testing self-driving cars, since these preferences could play a role in shaping the design and regulation of such vehicles. Carmakers may find, for example, that Chinese consumers would more readily enter a car that protected them over pedestrians.

But the authors of the study emphasized that the results are not meant to dictate how different countries should act. In fact, in some cases, the authors felt that technologists and policymakers should override the collective public opinion. Edmond Awad, an author of the paper, brought up the social status comparison as an example. “It seems concerning that people found it okay to a significant degree to spare higher status over lower status,” he said. “It's important to say, ‘Hey, we could quantify that’ instead of saying, ‘Oh, maybe we should use that.’” The results, he said, should be used by industry and government as a foundation for understanding how the public would react to the ethics of different design and policy decisions.

Awad hopes the results will also help technologists think more deeply about the ethics of AI beyond self-driving cars. “We used the trolley problem because it’s a very good way to collect this data, but we hope the discussion of ethics don’t stay within that theme,” he said. “The discussion should move to risk analysis—about who is at more risk or less risk—instead of saying who’s going to die or not, and also about how bias is happening.” How these results could translate into the more ethical design and regulation of AI is something he hopes to study more in the future.

“In the last two, three years more people have started talking about the ethics of AI,” Awad said. “More people have started becoming aware that AI could have different ethical consequences on different groups of people. The fact that we see people engaged with this—I think that that's something promising.”
